Section: New Results

Augmented Reality

Perception in augmented reality

AR Feels “Softer” than VR: Haptic Perception of Stiffness in Augmented versus Virtual Reality

Participants: Yoren Gaffary, Benoît Le Gouis, Maud Marchal, Ferran Argelaguet, Anatole Lécuyer and Bruno Arnaldi

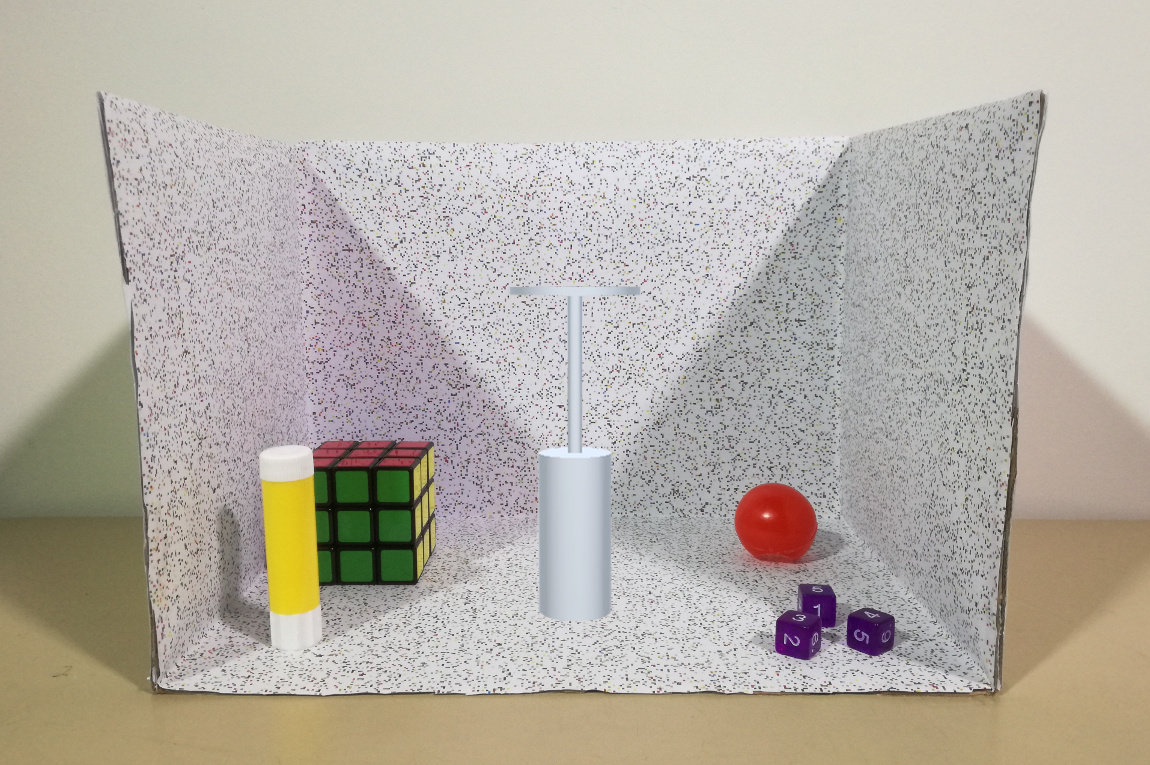

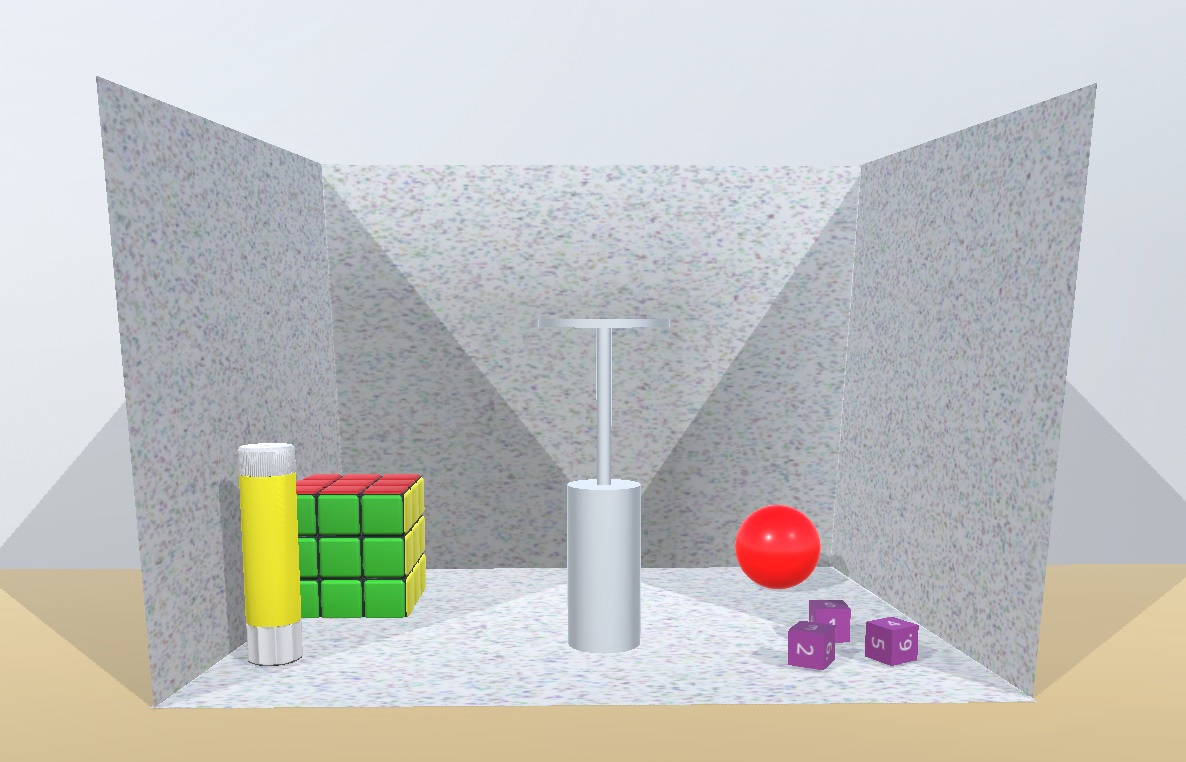

Does it feel the same when you touch an object in Augmented Reality (AR) or in Virtual Reality (VR)? In [3] we studied and compared the haptic perception of stiffness of a virtual object in two situations: (1) a purely virtual environment and (2) a real, augmented environment. We designed an experimental setup based on a Microsoft HoloLens and a haptic force-feedback device that lets users press a virtual piston and compare its stiffness successively in either Augmented Reality (the virtual piston is surrounded by several real objects, all located inside a cardboard box) or Virtual Reality (the same virtual piston is displayed in a fully virtual scene composed of the same objects). We conducted a psychophysical experiment with 12 participants. Our results show a surprising perceptual bias between the two conditions: on average, the virtual piston is perceived as stiffer in the VR condition than in the AR condition. For instance, when the piston had the same stiffness in AR and VR, participants selected the VR piston as the stiffer one in 60% of cases. This suggests a psychological effect, as if objects in AR felt “softer” than in pure VR. Taken together, our results open new perspectives on perception in AR versus VR, and pave the way for future studies aiming to characterize potential perceptual biases.
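
A common way to render stiffness on a force-feedback device is a linear spring (Hooke's law): the device pushes back with a force proportional to how far the probe has penetrated the virtual surface. The sketch below assumes such a model for the piston. The `VirtualPiston` class, the single-axis simplification and the numeric values (300 N/m, 10 cm) are hypothetical and are not taken from [3].

```python
from dataclasses import dataclass


@dataclass
class VirtualPiston:
    """Hypothetical piston model: a linear spring along the vertical axis."""
    stiffness: float    # k, in N/m (illustrative value, not from the study)
    rest_height: float  # height of the piston top at rest, in m

    def force(self, probe_height: float) -> float:
        """Upward force (N) to send to the haptic device for a given probe height.

        No force while the probe is above the piston top; once the probe
        penetrates, the force grows linearly with penetration depth.
        """
        penetration = self.rest_height - probe_height
        if penetration <= 0.0:
            return 0.0
        return self.stiffness * penetration


if __name__ == "__main__":
    # Same stiffness in both conditions, as in the equal-stiffness trials.
    ar_piston = VirtualPiston(stiffness=300.0, rest_height=0.10)
    vr_piston = VirtualPiston(stiffness=300.0, rest_height=0.10)
    for h in (0.12, 0.10, 0.09, 0.08):
        print(h, ar_piston.force(h), vr_piston.force(h))
```

In this model, two pistons with the same `stiffness` produce identical forces at every depth, so in equal-stiffness trials any difference in participants' judgments would reflect perception rather than the rendered force.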

Interaction in augmented reality

Evaluation of Facial Expressions as an Interaction Mechanism and their Impact on Affect, Workload and Usability in an AR game

Participants: Jean-Marie Normand and Guillaume Moreau

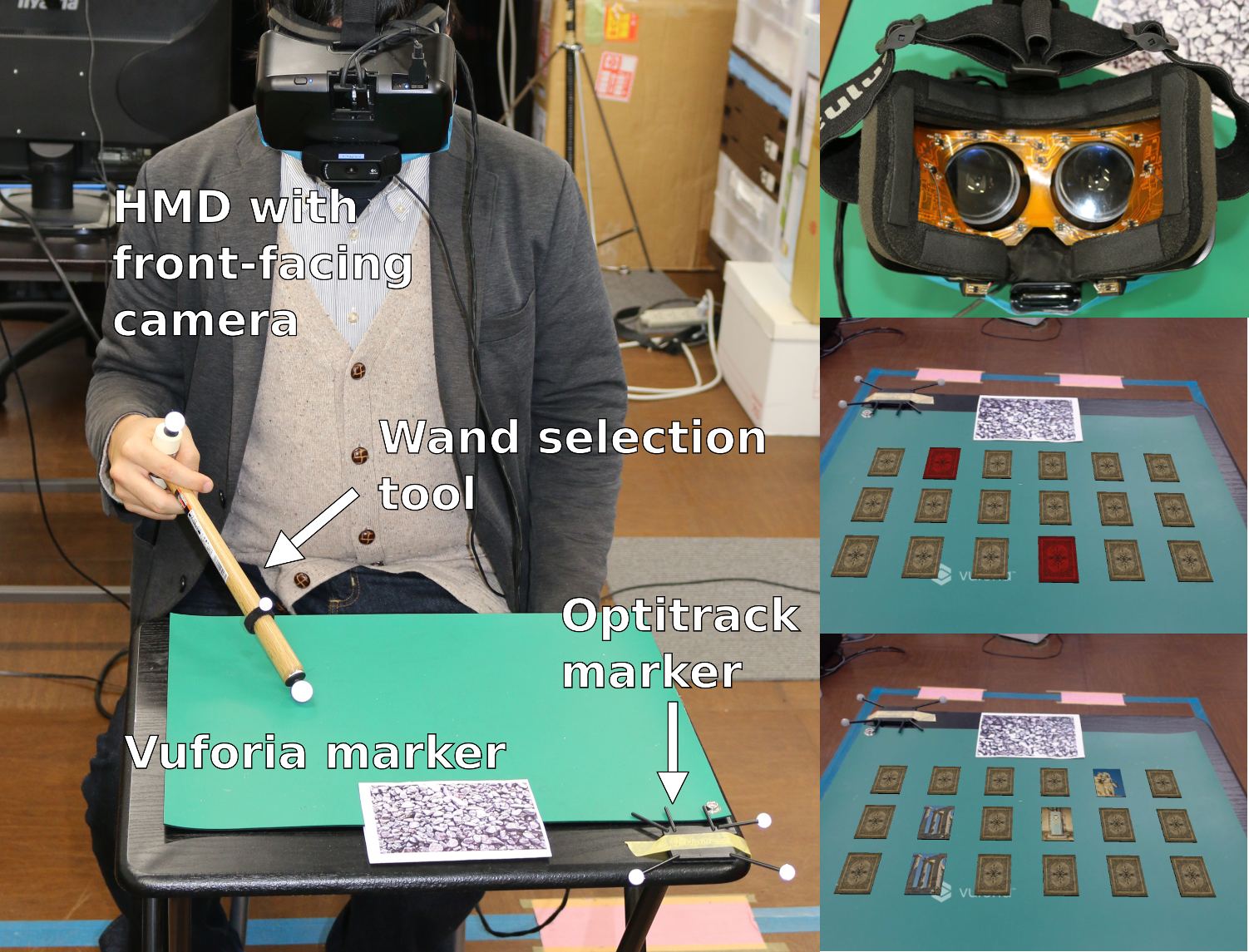

With the recent development of Head-Mounted Displays (HMDs) for Virtual Reality (VR) that can track and recognize users' Facial Expressions (FEs) in real time, we investigated the impact of using FEs as an action-trigger input mechanism (i.e., an FE mapped to a single action) on users' emotional state, as well as on workload and usability, compared to using a controller button. In [23] we developed an Augmented Reality (AR) memory card game in which users select virtual cards using a wand and flip them using either an FE (smiling or frowning) or an Xbox controller button. Users were split into three groups: (1) flipping the cards with a smile (n = 10); (2) flipping the cards with a frown (n = 8); and (3) flipping the cards with the Xbox controller button (n = 11). We found no significant differences between the groups in (i) participants' positive and negative affect or (ii) reported workload and usability, suggesting that FEs could be used inside an HMD in the same way as a controller button.
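
To illustrate what "an FE mapped to a single action" can mean in practice, here is a hypothetical sketch of an edge-triggered expression button. It assumes the HMD's face tracker exposes a per-frame confidence score in [0, 1] for the chosen expression. The `ExpressionTrigger` class, the thresholds (0.7 and 0.4) and the hysteresis design are illustrative assumptions, not the implementation used in [23].

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class ExpressionTrigger:
    """Hypothetical mapping from one facial expression to one action.

    `score` is an assumed per-frame confidence in [0, 1] for the chosen
    expression (e.g. smile or frown). Hysteresis (fire at or above `on`,
    re-arm at or below `off`) keeps a held expression from firing the
    action on every frame, like a button's press/release cycle.
    """
    action: Callable[[], None]
    on: float = 0.7
    off: float = 0.4
    armed: bool = True

    def update(self, score: float) -> bool:
        """Process one frame; return True if the action fired on this frame."""
        if self.armed and score >= self.on:
            self.armed = False
            self.action()
            return True
        if not self.armed and score <= self.off:
            self.armed = True
        return False


def run(scores: Iterable[float]) -> List[int]:
    """Feed a stream of scores; return the frame indices where a card flipped."""
    flips: List[int] = []
    trigger = ExpressionTrigger(action=lambda: None)  # placeholder "flip card"
    for i, s in enumerate(scores):
        if trigger.update(s):
            flips.append(i)
    return flips


if __name__ == "__main__":
    # A smile held over frames 1-3, relaxed, then a second smile at frame 6.
    print(run([0.1, 0.8, 0.9, 0.85, 0.3, 0.2, 0.75]))  # → [1, 6]
```

With this design, holding a smile flips exactly one card, and the user must relax the expression before the next flip, which mirrors pressing and releasing a controller button.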

This work was done in collaboration with the Interactive Media Lab of Keio University (Japan).

A State of the Art on the Combination of Brain-Computer Interfaces and Augmented Reality

Participants: Hakim Si-Mohammed, Ferran Argelaguet and Anatole Lécuyer

We reviewed the state of the art in combining Brain-Computer Interfaces (BCIs) with Augmented Reality (AR) [22]. In this work, we first introduced the field of AR and its main concepts. Second, we described the various systems combining AR and BCIs designed so far, categorized by application field: medicine, robotics, home automation and brain activity visualization. Finally, we summarized and discussed the results of our survey, showing that most previous works used the P300 or SSVEP paradigms with EEG in video see-through systems, and that robotics is the application field with the highest number of existing systems.

This work was done in collaboration with the MJOLNIR team.

Tracking

Increasing Optical Tracking Workspace of VR Applications using Controlled Cameras

Participants: Guillaume Cortes and Anatole Lécuyer

We proposed an approach that greatly increases the tracking workspace of VR applications without adding new sensors [15]. Our approach relies on controlled cameras that follow the tracked markers all around the VR workspace while providing 6DoF tracking data. We designed a proof of concept of this approach based on two consumer-grade cameras and a pan-tilt head. The resulting tracking workspace can be greatly increased, depending on the actuators' range of motion. The accuracy error and jitter remained limited during camera motion (0.3 cm and 0.02 cm, respectively). Therefore, whenever the final VR application does not require perfect tracking accuracy over the entire workspace, we recommend using our approach to enlarge the tracking workspace.
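
A core geometric step behind a controlled camera is turning a marker position into pan and tilt angles that center the marker in view. The sketch below assumes an idealized pan-tilt head whose rotation axes intersect at the camera's optical center, a hypothetical axis convention, and hypothetical joint limits (`PAN_RANGE`, `TILT_RANGE`). It is an illustration, not the controller from [15].

```python
import math
from typing import Tuple

# Hypothetical actuator limits (radians): the text only states that the
# workspace depends on the actuators' range of motion, not what it is.
PAN_RANGE = (-math.pi, math.pi)
TILT_RANGE = (-math.pi / 4, math.pi / 4)


def clamp(v: float, lo: float, hi: float) -> float:
    """Limit v to the closed interval [lo, hi]."""
    return max(lo, min(hi, v))


def aim_camera(marker: Tuple[float, float, float]) -> Tuple[float, float, bool]:
    """Pan/tilt angles that point the camera's optical axis at a marker.

    `marker` is (x, y, z) in the pan-tilt head's base frame, with x to the
    right, y up and z forward (assumed convention). Returns
    (pan, tilt, reachable); reachable is False when an angle had to be
    clamped, i.e. the head cannot center the marker.
    """
    x, y, z = marker
    pan = math.atan2(x, z)                  # rotation about the vertical axis
    tilt = math.atan2(y, math.hypot(x, z))  # elevation above the horizontal plane
    pan_c = clamp(pan, *PAN_RANGE)
    tilt_c = clamp(tilt, *TILT_RANGE)
    return pan_c, tilt_c, (pan_c == pan and tilt_c == tilt)


if __name__ == "__main__":
    # Marker 1 m to the right and 1 m ahead: 45 degrees of pan, no tilt.
    pan, tilt, ok = aim_camera((1.0, 0.0, 1.0))
    print(round(math.degrees(pan), 1), round(math.degrees(tilt), 1), ok)  # → 45.0 0.0 True
```

A fixed camera covers only its viewing frustum, whereas an actuated one can cover every direction reachable within its joint limits, which is why the workspace gain depends on the actuators' range of motion.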

This work was done in collaboration with the LAGADIC team.

An Optical Tracking System based on Hybrid Stereo/Single-View Registration and Controlled Cameras

Participants: Guillaume Cortes and Anatole Lécuyer

Optical tracking is also widely used in robotics applications such as unmanned aerial vehicle (UAV) localization. Unfortunately, such systems require many cameras and are consequently expensive. We proposed an approach to further increase the optical tracking volume without adding cameras [14]. First, when the target is no longer visible to at least two cameras, we propose a single-view tracking mode that requires only one camera. Furthermore, we again rely on controlled cameras able to follow the UAV all around the volume, providing 6DoF tracking data through multi-view registration. This is achieved using a visual servoing scheme. The two methods can be combined to maximize the tracking volume. We propose a proof of concept of such an optical tracking system based on two consumer-grade cameras and a pan-tilt actuator, and we applied this approach to UAV localization.
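
The hybrid scheme can be sketched in two parts: choosing a registration mode from how many cameras currently see the target, and a proportional visual-servoing step that re-centers the target in a controlled camera's image. Visual servoing typically drives an image error e toward zero so that it decays exponentially (ė = -λe). The sketch assumes a small-angle pinhole model with image x to the right and y up, and hypothetical values for the focal length (800 px) and gain (0.5). It is a simplified illustration, not the controller or the single-view registration method of [14]; the pose estimation performed in each mode is omitted.

```python
from enum import Enum
from typing import List, Sequence, Tuple


class Mode(Enum):
    MULTI_VIEW = "multi-view"    # target seen by >= 2 cameras
    SINGLE_VIEW = "single-view"  # target seen by exactly one camera
    LOST = "lost"                # target seen by no camera


def select_mode(visible: Sequence[bool]) -> Mode:
    """Pick the registration mode from per-camera visibility flags."""
    n = sum(visible)
    if n >= 2:
        return Mode.MULTI_VIEW
    if n == 1:
        return Mode.SINGLE_VIEW
    return Mode.LOST


def servo_step(error_px: Tuple[float, float], focal_px: float,
               gain: float) -> Tuple[float, float]:
    """Proportional pan/tilt increments (radians) from the image error.

    Under the small-angle model, rotating the camera by d shifts the
    target by about -focal_px * d in the image, so d = gain * e / focal_px
    gives e_next = (1 - gain) * e.
    """
    ex, ey = error_px
    return gain * ex / focal_px, gain * ey / focal_px


def simulate(error_px: Tuple[float, float], focal_px: float = 800.0,
             gain: float = 0.5, steps: int = 5) -> List[Tuple[float, float]]:
    """Apply servo_step repeatedly under the same linear image model."""
    history = [error_px]
    ex, ey = error_px
    for _ in range(steps):
        d_pan, d_tilt = servo_step((ex, ey), focal_px, gain)
        ex -= focal_px * d_pan   # target shifts opposite to the camera motion
        ey -= focal_px * d_tilt
        history.append((ex, ey))
    return history


if __name__ == "__main__":
    print(select_mode([True, False]))  # → Mode.SINGLE_VIEW
    for e in simulate((80.0, -40.0)):
        print(e)  # with gain 0.5 the error roughly halves at each step
```

A gain between 0 and 1 gives a monotonic decay of the error in this model; larger gains re-center faster but, on a real actuator with delays, risk overshoot.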

This work was done in collaboration with the LAGADIC team.